Machine Learning As A Service

Our portfolio company Clarifai introduced two powerful new features on their machine learning API yesterday:

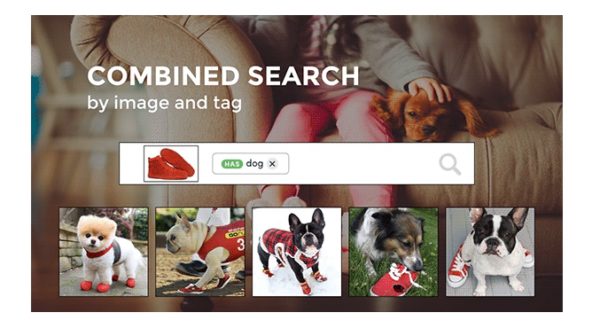

- visual search

- train your own model

Visual search is super cool.

But I am even more excited about the train your own model feature.

Clarifai says it well in their blog post announcing these two new features:

We believe that the same AI technology that gives big tech companies a competitive edge should be available to developers or businesses of any size or budget. That’s why we built our new Custom Training and Visual Search products – to make it easy, quick, and inexpensive for developers and businesses to innovate with AI, go to market faster, and build better user experiences.

Machine learning requires large data sets and skilled engineers to build the technology that can derive “intelligence” from data. Small companies struggle with both. And so without machine learning as a service from companies like Clarifai, the largest tech companies will have a structural advantage over small developers. Using an API like Clarifai allows you to get the benefits of scale collectively without having to have that scale individually.

Being able to customize these machine learning APIs is really the big opening. Clarifai says this about that:

Custom Training allows you to build a Custom Model where you can “teach” AI to understand any concept, whether it’s a logo, product, aesthetic, or Pokemon. Visual Search lets you use these new Custom Models, in conjunction with our existing pre-built models (general, color, food, wedding, travel, NSFW), to browse or search through all your media assets using keyword tags and/or visual similarity.
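Here is a minimal Python sketch of one common way these two features can work. It assumes each image has already been turned into an embedding vector by a pretrained model. It is not Clarifai's implementation. The toy vectors, the helper names `train_concept` and `visual_search`, and the nearest-centroid approach are all illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def train_concept(examples: List[Vector]) -> List[float]:
    """Hypothetical helper: 'teach' a custom concept as the mean (centroid) of its example embeddings."""
    dim = len(examples[0])
    return [sum(vec[i] for vec in examples) / len(examples) for i in range(dim)]


def visual_search(query: Vector, assets: Dict[str, Vector], top_k: int = 3) -> List[Tuple[str, float]]:
    """Hypothetical helper: rank stored assets by cosine similarity to a query embedding."""
    scored = [(name, cosine(query, vec)) for name, vec in assets.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical 3-dimensional embeddings; real models use hundreds of dimensions.
assets = {
    "logo_a.jpg": [0.9, 0.1, 0.0],
    "logo_b.jpg": [0.8, 0.2, 0.1],
    "beach.jpg": [0.0, 0.2, 0.9],
}

# Train a "logo" concept from two examples, then search the collection with it.
logo_concept = train_concept([assets["logo_a.jpg"], assets["logo_b.jpg"]])
print(visual_search(logo_concept, assets, top_k=2))
```

The same similarity function serves both features in this sketch. A trained concept is simply a point in embedding space that the rest of the collection can be ranked against.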

If you are building or have built a web or mobile service with a lot of image assets and want to get more intelligence out of them, give Clarifai’s API a try. I think you will find it a big help in adding intelligence to your service.

Comments (Archived):

“The Cambrian explosion was triggered by the evolution of vision. Visual intelligence is a key aspect of general intelligence.” – Dr Fei Fei Li, Director of Stanford AI Lab and Chief Scientist at A16Z. She also notes that the number of variations in the real world can create a lot of problems for artificial intelligence. She gave examples of machines mistaking a toothbrush for a baseball bat or a bearded man for a teddy bear, and of cats in unusual poses not being identifiable. https://uploads.disquscdn.c… Also, maybe the issues of image bias will disappear with more representative training data: http://qz.com/774588/artifi… https://uploads.disquscdn.c… Microsoft and Baidu have now also released their Machine Learning as a Service frameworks.

That cat picture – seems torturous!

Bodacious body-dexterity!

The headline of the article implies that there is bias. But that may not be the case. Beauty is in part about symmetry. The use of makeup allows for better contrast, symmetry and definition of key facial details. As such, someone with white skin would have an advantage in any algorithm over someone with dark skin, all else equal. [1] [2] Look at the eyes of the black girl vs. the white girl. The eyes stand out more with white skin. I am wondering if clothes are taken into account as well (meaning the figure of the person). If so, clothing that didn’t accentuate a figure would put someone at a disadvantage, just as being heavy does in some cases, or wearing a “muumuu”.

[1] I’ve noted this as well, even with white women who get too much of a tan.

[2] Too much makeup, though, at least to me, takes away from beauty. So does anything that isn’t genuine (like fake breasts, fwiw).

In the sample set for beauty.ai, they isolated the pixel frames around the face and its features only. They asked people to submit photos that were as au naturel as possible. Here’s Florence Colgate, who was deemed by British scientists to have the most symmetrical face. And here too is the composite an artist did of the Top 10 “Most Beautiful Women”. Beauty’s subjective and chemical, and goes deeper than the “Golden Ratio” proportions. https://uploads.disquscdn.c… https://uploads.disquscdn.c… https://uploads.disquscdn.c…

That sounds super useful to developers. I’d love to find a demo of the search for consumers.

Amy Liu has a video here: http://blog.clarifai.com/tr… It’s at 4 mins 30 secs in. Not sure the first 4 minutes are needed.

Thanks. Is there a web link to try it?

It seems consumers will have to wait and see what apps devs make. I totally get it. At the Deep Learning Summit in January, Sentient Technologies gave a public demo of visual search with AI like so: https://www.youtube.com/wat…

You can check out our UI at preview.clarifai.com. We’ve loaded it up with a few demo collections for you to use

Thanks!

DevKit says: “You can send up to 128 images in one API call”, and they’re giving 50,000 free API credits per month for a limited time. So does that mean 1785 free API calls? https://uploads.disquscdn.c…

Hey, Jason from Clarifai here. To clarify (har har), we’re giving away 50,000 API calls

Ah, thanks. So I’m guessing tagging annotation will allow for: `['tree_no_leaves', 'dewdrops_plus_sunshine']`. Also now wondering how this search operation works for dependent concept pairs: “Show all trees without leaves and sunshine but no dewdrops.”

Yep, you can add annotations and create models where tags are mutually exclusive, or not. On the search side, we do allow some logical queries. The docs provide more info than I could here: https://developer-preview.c…
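The distinction between mutually exclusive and non-exclusive tags maps onto a standard modeling choice. This is a generic sketch, not a description of how Clarifai builds its models. Mutually exclusive concepts are typically scored with a softmax, so their scores compete and sum to 1. Independent concepts each get their own sigmoid, so several can be "on" at once. The concept names and raw scores below are made up.

```python
import math
from typing import Dict


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Mutually exclusive concepts: probabilities compete and sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {name: math.exp(v - peak) for name, v in logits.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


def sigmoid_scores(logits: Dict[str, float]) -> Dict[str, float]:
    """Independent concepts: each score is its own yes/no probability."""
    return {name: 1.0 / (1.0 + math.exp(-v)) for name, v in logits.items()}


# Hypothetical raw model outputs (logits) for a single photo.
logits = {"tree_no_leaves": 2.0, "dewdrops_plus_sunshine": 1.5, "cat": -3.0}

exclusive = softmax(logits)
independent = sigmoid_scores(logits)
print(round(sum(exclusive.values()), 6))               # softmax scores sum to 1
print([k for k, p in independent.items() if p > 0.5])  # more than one concept can pass
```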

Yup, searching by multiple concepts with NOT logic: `app.inputs.search_by_predicted_concepts(concepts=['cat', 'dog'], values=[True, False])`
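The `values=[True, False]` pair in that call reads as "must have cat, must not have dog". Below is a local Python sketch that mimics those semantics over images that have already been tagged. It can also express the earlier question about trees without leaves and sunshine but no dewdrops. It runs entirely in memory. The function `search_by_concepts`, the 0.5 threshold, and the sample scores are hypothetical and are not part of the Clarifai client.

```python
from typing import Dict, List, Sequence


def search_by_concepts(
    index: Dict[str, Dict[str, float]],
    concepts: Sequence[str],
    values: Sequence[bool],
    threshold: float = 0.5,  # hypothetical cutoff for "concept is present"
) -> List[str]:
    """Hypothetical local search: return image ids matching every (concept, value) pair.

    value=True  -> the concept must be predicted above the threshold.
    value=False -> the concept must NOT be predicted above the threshold.
    """
    if len(concepts) != len(values):
        raise ValueError("concepts and values must have the same length")
    matches = []
    for image_id, predictions in index.items():
        if all(
            (predictions.get(concept, 0.0) > threshold) == wanted
            for concept, wanted in zip(concepts, values)
        ):
            matches.append(image_id)
    return matches


# Hypothetical predicted-concept scores for three images.
index = {
    "img1": {"tree_no_leaves": 0.9, "sunshine": 0.8, "dewdrops": 0.1},
    "img2": {"tree_no_leaves": 0.95, "sunshine": 0.7, "dewdrops": 0.85},
    "img3": {"cat": 0.97},
}

# "Trees without leaves and sunshine, but no dewdrops."
print(search_by_concepts(index, ["tree_no_leaves", "sunshine", "dewdrops"], [True, True, False]))
# "Cat but not dog", mirroring values=[True, False].
print(search_by_concepts(index, ["cat", "dog"], [True, False]))
```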

I will email you shortly since you overlap with the work I’m doing now.

@jason novack To better understand your pricing: a unit is a single image with one tag request, so multiple tag requests on the same image mean multiple units. An API call can handle 128 units, so, for example, if there were 10 tag requests per image, that’s 1,280 units consumed in one API call. If it’s $479/month for 250,000 images (units), then for 24 FPS video it looks pretty expensive to provide image analysis as a service. Or is there a misunderstanding of your pricing? Thanks.

A unit is passing one image to our /tag endpoint, which will return the output of the model you chose and will contain multiple tags that apply to the image (our “general” model returns 20 tags by default). You can try our demo at clarifai.com/demo to see what I mean. When we say that a call can handle 128 units, we mean that you can batch process up to 128 images with a single call, and each one will cost one unit. So for the $479 plan, you are effectively paying $0.001916 to generate up to 20 relevant tags for one image.
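The pricing arithmetic in that reply can be checked with a few lines of Python. The sketch assumes only what the thread states: one unit per image sent to the tag endpoint, up to 128 images per call, and 250,000 units on the $479 plan. The constant and function names are illustrative.

```python
import math

PLAN_PRICE_USD = 479.0     # monthly plan price quoted in the thread
PLAN_UNITS = 250_000       # units (images) included in that plan
MAX_IMAGES_PER_CALL = 128  # batch limit quoted from the DevKit


def cost_per_unit() -> float:
    """Price of tagging one image; all tags returned for it (20 by default) are included."""
    return PLAN_PRICE_USD / PLAN_UNITS


def calls_needed(num_images: int) -> int:
    """Batch calls required when each call carries at most 128 images."""
    return math.ceil(num_images / MAX_IMAGES_PER_CALL)


print(f"${cost_per_unit():.6f} per image")
print(calls_needed(1_280))  # 1,280 images -> 1,280 units, sent in 10 batch calls
```

Under this model, the number of tags per image does not multiply the cost. What matters is the number of images.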

For video, we process 1 FPS by default (regardless of the actual frame rate), and can change that on request.
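The default sampling rate is what keeps video affordable. Here is a rough Python estimate. It assumes each sampled frame is billed as one unit at the $479 plan's implied rate. The thread does not say exactly how video is priced, so treat the numbers as an illustration only. The sketch compares one hour of video at the default 1 FPS with tagging every frame of 24 FPS footage.

```python
PRICE_PER_UNIT_USD = 479.0 / 250_000  # implied rate from the $479 / 250,000-unit plan


def video_cost(duration_s: float, sample_fps: float) -> float:
    """Estimated cost, ASSUMING each sampled frame is billed as one unit."""
    frames = int(duration_s * sample_fps)
    return frames * PRICE_PER_UNIT_USD


one_hour = 3600
print(f"1 FPS:  ${video_cost(one_hour, 1):.2f}")   # 3,600 sampled frames
print(f"24 FPS: ${video_cost(one_hour, 24):.2f}")  # 86,400 sampled frames
```

On that assumption, the 250,000-unit plan covers roughly 69 hours of video at 1 FPS, but under 3 hours if all 24 frames per second were tagged.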

Love what Clarifai is doing

Meanwhile, in other AI news today … Google released its Neural Machine Translation framework, which uses sequence-to-sequence methods to literally translate between languages. TechCrunch’s “Advancing the art by removing the art” reflects how Google treats natural language purely as a science rather than as an art with expressions of emotion. https://uploads.disquscdn.c…

This is awesome! Love Clarifai and what they are doing with machine learning and vision. The ability to create custom models is one of the most disruptive forces in AI. The more people are able to use these technologies, the more democratized AI will be. We need more companies like this! BTW, at MonkeyLearn we share the same vision, and we also offer custom model creation, but for text instead of vision. Would love for this community to try it out and tell us what you think.

The name Clarifai is triggering me. I’m not sure why.

I am thinking ClearAi.com. Clear is a word. AI is a concept. Interestingly, it’s owned by an allergy doctor. Clarifai to me seems like an allergy drug.

ClearAi is MUCH better. Clarifai is totally an allergy drug! Nice.

https://uploads.disquscdn.c… @le_on_avc – My go-to when hiking during high pollen.

Clarifai continues to rock. Very cool to see more people coming out with offerings like this. IBM has a similar trainable object recognition API here: http://www.ibm.com/watson/d…. While these vendors do all the heavy lifting as far as AI goes, one of the hardest things for customers who want to use their services is building the dataset to train the model. That’s where we come in at Spare5!

Cool that they are doing this for SMBs. Will be interesting to see how scrappy SMBs are. It’s very competitive now, and almost impossible to beat incumbent corporations, which are not only huge in scale and scope but can also spend a lot of time, money, and resources on regulatory capture to crush competitors. One of the blockchain’s biggest promises is the transfer of power from companies like Facebook (who make money off the data we give them freely, without giving us true monetary value) to the people.

Industrial applications for machine learning: http://mlaas.com/

Photonic sensors are also used in food manufacturing. Misshapen fruit, which the light hits differently, or chocolate surfaces that aren’t “smooth” get ejected and dumped.

This is pretty cool. I did a test with two random images that I found. In one image it hits everything but “smile”. In the other image it hits almost everything but “tongue”. It also marks one as NSFW… https://uploads.disquscdn.c… https://uploads.disquscdn.c…

Well, it’s doing better than TensorFlow and the ImageNet concept hierarchy, which had Elon Musk tagged and identified as a sweatshirt. https://uploads.disquscdn.c…

TensorFlow needs more tuning, but when it works, it works. Shawn still prefers Torch.

Yep, Torch is still better. Turi, which Apple recently acquired, is 3 times faster than TensorFlow.

Thanks for sharing this! Indeed very cool, and well timed too. As someone who has been working in ad tech/predictive analytics, I’ve found image recognition for brand protection purposes to be a challenge. I will definitely give Clarifai a try, and possibly reach out to the team as well.

What’s the sales and marketing approach for clarifi? PS: Naming a company something that gets spell-corrected has its issues.

OOOH

And in other news, I filled a glass vase with freshly dropped conkers (horse chestnut tree) today. Looks good on top of the bookshelves. I wonder if Clarifai would recognise a conker and know what it is?

Part of me wants to know if this makes tuning a problem, because you are not directly specifying multiple parts of the AI

Wicked.

Except AI’s not magic, it’s maths. Doing prediction and functional ordered commands with maths, given a data set, has been around for 250+ years, since statistical methods were combined with probability (itself around since 1654). It’s when we get to the issues of natural language understanding that existing maths frameworks (all the way back to the geometric patterns in cave paintings of 70,000 BC and the numeral system the Sumerians invented circa 3000 BC) are inadequate. Trying to teach the broom to speak with and understand us as if it’s human is just about the hardest challenge in human history, because it involves so many disciplines: neuroscience, maths, linguistics, computing, psychology, economics, biology, anthropology, art and more.